Learn a fast, reliable, and collaborative analysis approach

Due to the sheer volume of interest that product, technical, and commercial teams have in understanding the merchant experience, much of the research conducted at Adyen is done by non-researchers.

In the following article, I’ll share how our Research Insights team works with non-researchers to conduct customer research analysis. I'll shed some light on what I’ve learned along the way, with a particular focus on making it as accessible, efficient, and impactful as possible for non-researchers.

A quick note before we get started: Dovetail has played a big part in making research analysis accessible to non-researchers; it’s now firmly nestled within our process. For that reason, you could find some of the terminology a bit Dovetail-specific, but not to worry—the underlying principles can be applied to analysis using any tool.

Two Brains > One

When analyzing a complex web of user experiences, two brains are generally better than one. On the most impactful research projects, I always bring along a range of key stakeholders for the research scoping, planning, execution, and analysis phases. At least one of those stakeholders also ends up becoming my co-analyzer-in-crime to dive deep into the data we gathered. UX writers, designers, product managers, and other researchers have all stepped up to be analysis sidekicks. Generally, as long as the person has a curious and analytical mindset, they make a great analysis sidekick.

The benefits of collaborative analysis

Analysis goes faster: splitting the analysis with someone else can cut the time to finish roughly in half

Deeper interpretation: another person to bounce your perspective off of helps build a more multifaceted understanding of the participants’ experience

More accurate analysis leads to stronger evidence: You gain more confidence about applying insights to important business decisions

The costs of collaborative analysis

Coordination check-ins are needed to reduce interpretation error, and these do take time

Create a laundry list of tags

Once the research notes or transcripts are clean and ready to be analyzed, we resist the urge to immediately dive into coding by first stopping to list out all the possible codes or tags that emerged in the research. This prevents us from ending up with an abundance of tags that might not be relevant, or even worse, might have unaligned taxonomy levels. After having coded all the data, the last thing we want is to have to code it all again! Here’s how we do it:

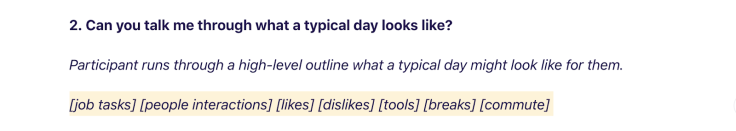

Review the original research question script for inspiration

With my analysis sidekick, we note down words that capture various answers to each research question. These are the categories that become our tags.

We copy our words and paste them into the “tags” section of Dovetail and categorize them into groups (if there are more than 20 unique tags).

Capture extraneous tag topics beyond the script

Review the interview notes (yours and anyone else's) and any debrief notes, and write down any other tag ideas you may have missed from reviewing the questions alone

Select broad enough taxonomy levels

Determining the optimal nuance level of a code or tag can be a tricky process. If tags are too broad, then important data and nuance can be missed, resulting in having to create new, more detailed tags and re-tag all the data. If tags are too narrow, we can end up with too many tags (in my experience, more than 50 tags can become unmanageable) and have to spend time combining them into broader tags.

An exercise that can help is to think of all possible answers to the research questions and create categories that would accommodate many different answers.

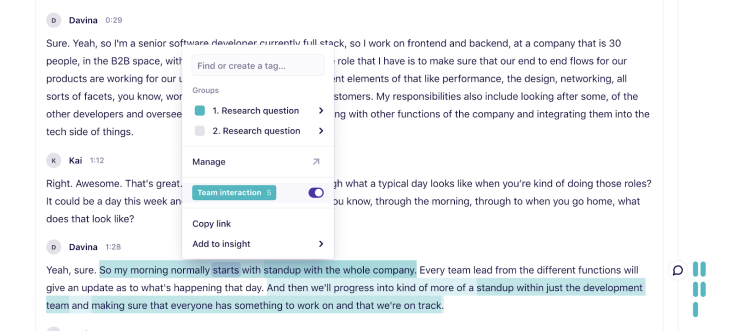

For example, let’s say one of our research questions was to better understand a day in the life of a tech worker. Tech workers would have likely mentioned many different components of their days, such as, “I have a meeting, I get coffee with colleagues, I grab a colleague that’s passing by in the hallway to get their feedback.” Rather than tagging every single component that tech workers mentioned as individual tags (in this case, “meetings”, “coffees”, and “drive-by conversations,” respectively), we could tag them all into the common broader thematic topic of “interactions with colleagues.”

The benefit of this broader tag approach is that the nuance of the type of interaction is still captured and can be found by clicking into the tag, but the overarching commonality of interactions with colleagues is not lost. I have found this balance between retaining nuance and not getting lost in it to be the most efficient way to capture topics accurately and maintain their integrity while still being able to easily compare them with other topics and segmentation criteria later in the synthesis phase.
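To make the idea concrete, here is a minimal sketch of how a broad tag can hold several kinds of detail at once. It is plain Python for illustration only, not something Dovetail exposes; the `BROAD_TAG_FOR` mapping and the `group_by_broad_tag` helper are hypothetical names, and the quotes are the ones from the example above.

```python
from collections import defaultdict

# Hypothetical mapping from the narrow topics we decided NOT to create
# as separate tags to the broad tag that covers them.
BROAD_TAG_FOR = {
    "meetings": "interactions with colleagues",
    "coffees": "interactions with colleagues",
    "drive-by conversations": "interactions with colleagues",
}


def group_by_broad_tag(mentions):
    """Group (detail, quote) pairs under their broad tag.

    The detail travels with the quote, so the nuance is still there
    when you "click into" the broad tag.
    """
    grouped = defaultdict(list)
    for detail, quote in mentions:
        # Fall back to the detail itself if no broad tag is defined.
        broad_tag = BROAD_TAG_FOR.get(detail, detail)
        grouped[broad_tag].append((detail, quote))
    return dict(grouped)


mentions = [
    ("meetings", "I have a meeting"),
    ("coffees", "I get coffee with colleagues"),
    ("drive-by conversations",
     "I grab a colleague that's passing by in the hallway to get their feedback"),
]

grouped = group_by_broad_tag(mentions)
for broad_tag, items in grouped.items():
    print(broad_tag)
    for detail, quote in items:
        print(f"  [{detail}] {quote}")
```

All three mentions land under one tag, yet each keeps its own label, which is the trade-off described above: one tag to compare against other topics, with the detail one click away.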

Armed with this list of tags, we are ready to start tagging the transcript notes with the confidence that the vast majority of tagging can be completed in just one go.

Synchronize tag interpretation with your analysis sidekick

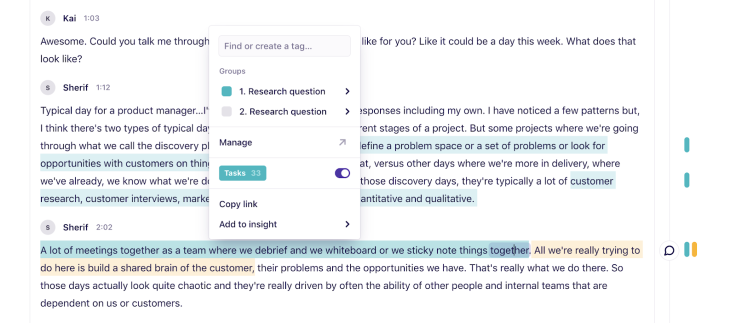

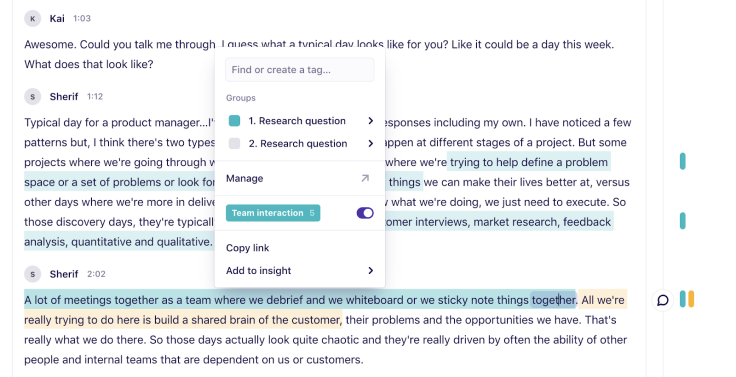

Involving another person in the analysis process can create room for error. Tagging similar data in different ways leads to inaccurate highlight categorization and ultimately makes it difficult to compare these highlights in the synthesis phase. To resolve this, once the laundry list of tags has been created, the researcher and their analysis sidekick need to synchronize on exactly what type of data each tag should cover. Luckily, there’s a shortcut to doing this.

Each analyst selects one transcript note to tag completely using the laundry list of tags created. Selecting two transcript notes that are very different from each other has the added benefit of covering a wider variety of tags to coordinate on

Next, we swap notes and review each other’s tags. This is where we check to see if we would have tagged anything differently than our sidekick

If we would have tagged a highlight differently, we note the difference in a comment

Once we’ve finished reading through each other’s tags, we discuss any discrepancies and come to an agreement on what topics each tag includes

If there was disagreement on multiple tags, then we try the process again with two other transcript notes

Once there is agreement on tag interpretation, we decide how to split the remaining transcript notes. I like to continue tagging them while on a live video conferencing call with my sidekick. If new tags come up, we add them to our tag list and alert each other so we can go back and re-tag previous notes with the new tags
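The swap-and-review step above boils down to comparing two sets of tags per highlight. We do this by reading and commenting, but if you keep tags in a spreadsheet or export, a small script can list the discrepancies for you. The sketch below is an assumption-laden illustration: the data shape (a dictionary from highlight ID to a set of tags), the highlight IDs, and both function names are hypothetical, and nothing here is a Dovetail API.

```python
def find_discrepancies(tagger, reviewer):
    """Return the highlights where the two analysts chose different tags.

    Both arguments map a highlight ID to the set of tags applied to it.
    """
    discrepancies = {}
    for highlight_id in sorted(tagger.keys() | reviewer.keys()):
        tagger_tags = tagger.get(highlight_id, set())
        reviewer_tags = reviewer.get(highlight_id, set())
        if tagger_tags != reviewer_tags:
            discrepancies[highlight_id] = {
                "tagger_only": tagger_tags - reviewer_tags,
                "reviewer_only": reviewer_tags - tagger_tags,
            }
    return discrepancies


def agreement_rate(tagger, reviewer):
    """Share of highlights on which both analysts applied identical tags."""
    all_ids = tagger.keys() | reviewer.keys()
    if not all_ids:
        return 1.0
    return 1 - len(find_discrepancies(tagger, reviewer)) / len(all_ids)


# Invented example data: what the original tagger applied, and what the
# reviewing sidekick would have applied to the same highlights.
tagger = {
    "h1": {"interactions with colleagues"},
    "h2": {"dislikes/challenges", "staying updated"},
    "h3": {"great quotes"},
    "h4": {"staying updated"},
}
reviewer = {
    "h1": {"interactions with colleagues"},
    "h2": {"staying updated"},
    "h3": {"great quotes", "likes"},
    "h4": {"staying updated"},
}

discrepancies = find_discrepancies(tagger, reviewer)
rate = agreement_rate(tagger, reviewer)
for highlight_id, diff in discrepancies.items():
    print(highlight_id, diff)
print(f"Agreement: {rate:.0%}")
```

A low agreement rate is a signal to repeat the exercise with two other transcript notes before splitting up the rest. The script only surfaces where you differ; the conversation about what each tag should cover still has to happen between people.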

It can help to coordinate on whether to tag thoughts individually or as part of a paragraph. I prefer tagging each thought or sentence individually as each highlight is separated more granularly in the highlights tab during analysis, meaning I don’t need to click into the tag to read the whole highlight. Others prefer to highlight entire paragraphs as it speeds up the tagging process. Both approaches work!

Some handy tags to remember from project to project

A “great quotes” tag is handy for dumping all compelling user quotes for easy access when creating videos and presentations for insight sharing.

Broad “challenges” and “likes” tags recur in every project since research interviews consistently include things the participants like and especially dislike. They are handy to combine with other tags when filtering in the analysis stage.

During synthesis, include subject matter experts early and often

Technical topics sometimes require interpretation from technical experts in the field. For example, when research participants referred to specific configurations in our platform, I needed to include our platform product manager to help explain and contextualize what the participants were referring to.

During tagging, I pull in subject matter experts by @tagging them in comments directly on the relevant participant quotes in the transcript notes.

If possible, the subject matter expert can reply to my question directly in the comments, or I can expect an incoming call with an explanation

Tag smarter by combining tag views in the filter

Filtering in the “Highlights” synthesis section of Dovetail allows for combining tags in numerous ways for a more granular perspective. This means that tags don’t need to be super detailed because they can be combined with other tags via a filter. This is why I tend to err on the side of capturing highlights into broader tags (as discussed above).

For example, in the highlights view, to discover all update-related challenges, I create filters for “tag has all of dislikes/challenges + staying updated”. This saves me from needing to create a separate tag for “updating challenges.”
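The logic behind a “has all of” filter is a simple subset check: a highlight matches when every required tag is among its tags. The sketch below shows that logic in plain Python as an illustration of the principle, not of how Dovetail implements it. The `Highlight` class, the `has_all_of` function, the “onboarding” tag, and the sample highlight texts are all invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Highlight:
    text: str
    tags: frozenset


def has_all_of(highlights, required_tags):
    """Keep only the highlights that carry every one of the required tags."""
    required = frozenset(required_tags)
    return [h for h in highlights if required <= h.tags]


# Invented sample highlights, tagged with broad tags only.
highlights = [
    Highlight("I never know when a new version is released.",
              frozenset({"dislikes/challenges", "staying updated"})),
    Highlight("The release newsletter is helpful.",
              frozenset({"likes", "staying updated"})),
    Highlight("Setup took far too long.",
              frozenset({"dislikes/challenges", "onboarding"})),
]

# Equivalent of "tag has all of dislikes/challenges + staying updated".
update_challenges = has_all_of(
    highlights, {"dislikes/challenges", "staying updated"}
)
for h in update_challenges:
    print(h.text)
```

Only the first highlight matches, because it is the only one carrying both tags. Two broad tags combined at filter time do the work of a dedicated “updating challenges” tag, without adding to the tag list.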

Experiment with insight creation

When analyzing the tags using filters, I like to do two read-throughs of the highlights before creating an “insight” from the perceived patterns. I’ve witnessed two approaches to the insights creation phase:

Approach 1

In the first read-through, I note down any patterns or themes that come up in the highlights

These patterns end up eventually becoming insights

Then I do a second read-through and select the relevant highlights that fell into the patterns I noted down. From the selected highlights, I create an “insight” and add the selected highlights to it

Approach 2

Other analysis sidekicks have preferred to use the Highlights table and add all the highlights in the filtered view to a new, as-yet-unnamed insight

While reading through all the highlights within the insight, they delete the highlights that don’t fit into any pattern or theme

Then they title the insight depending on what theme they discovered

Infuse insight creation with another perspective

In a perfect world, the entire tag analysis and synthesis phase could be a live collaborative process with the analysis sidekick. However, other scheduled meetings often make this a pipe dream. An alternative approach is to have daily “stand-ups” with the analysis sidekick at the end of each day to enhance or disqualify each insight with another perspective.

At the start of the insights creation phase, we divide the tags in half and choose which ones we want to analyze (keeping in mind the most relevant tag combinations to analyze in tandem).

Over the coming days, as we analyze our respective sets of tags, we create insights.

We meet at the end of each day for 10 to 30 minutes to share the insights we created with each other.

This is an opportunity to add more depth or contest the insights created with additional evidence from a whole other set of tags.

This divide-and-conquer approach allows us to cut down tag analysis time and adds an alternative perspective and depth to each insight created. All this gets us closer to preventing bias from seeping into our insights, making way for more confident decision making and, eventually, more confidence in qualitative research as a practice.

Collaboration builds empathy for research

Opening up the research process to our non-researcher stakeholders allows them to contribute meaningfully and speeds up an often long and opaque process. But perhaps one of the biggest lessons learned by immersing stakeholders in the messy data synthesis process is that it exposes the complexity that shrouds human behavior and gives new depth to their empathy for the merchants we serve.

I’ve often found this empathy also extends to researchers and fosters new appreciation for our practice.