From IQ tests to design thinking: The complex origins and invisible legacy of brainstorming


The little known history of brainstorming reveals a cautionary tale on the dangers of bad data and misused methodologies.

Brainstorming has a bad reputation.

It’s an outdated, naff, insufficiently structured, over-hyped process used only by people who don’t understand real facilitation.

That’s brainstorming’s reputation today. It was also brainstorming’s reputation almost immediately after it was introduced to America in 1953.

By 1963, Alex Osborn—a pioneer in the world of creative thinking methodologies—was describing brainstorming’s application, reputation, and limitations with scorn:

“In the early 1950s, brainstorming became too popular too fast, with the result that it was frequently misused. Too many people jumped at it as a panacea, and turned against it when no miracles resulted.”

Alex Osborn, Applied Imagination (1963 edition)

Osborn was in a unique position to make these comments about brainstorming. He invented it.

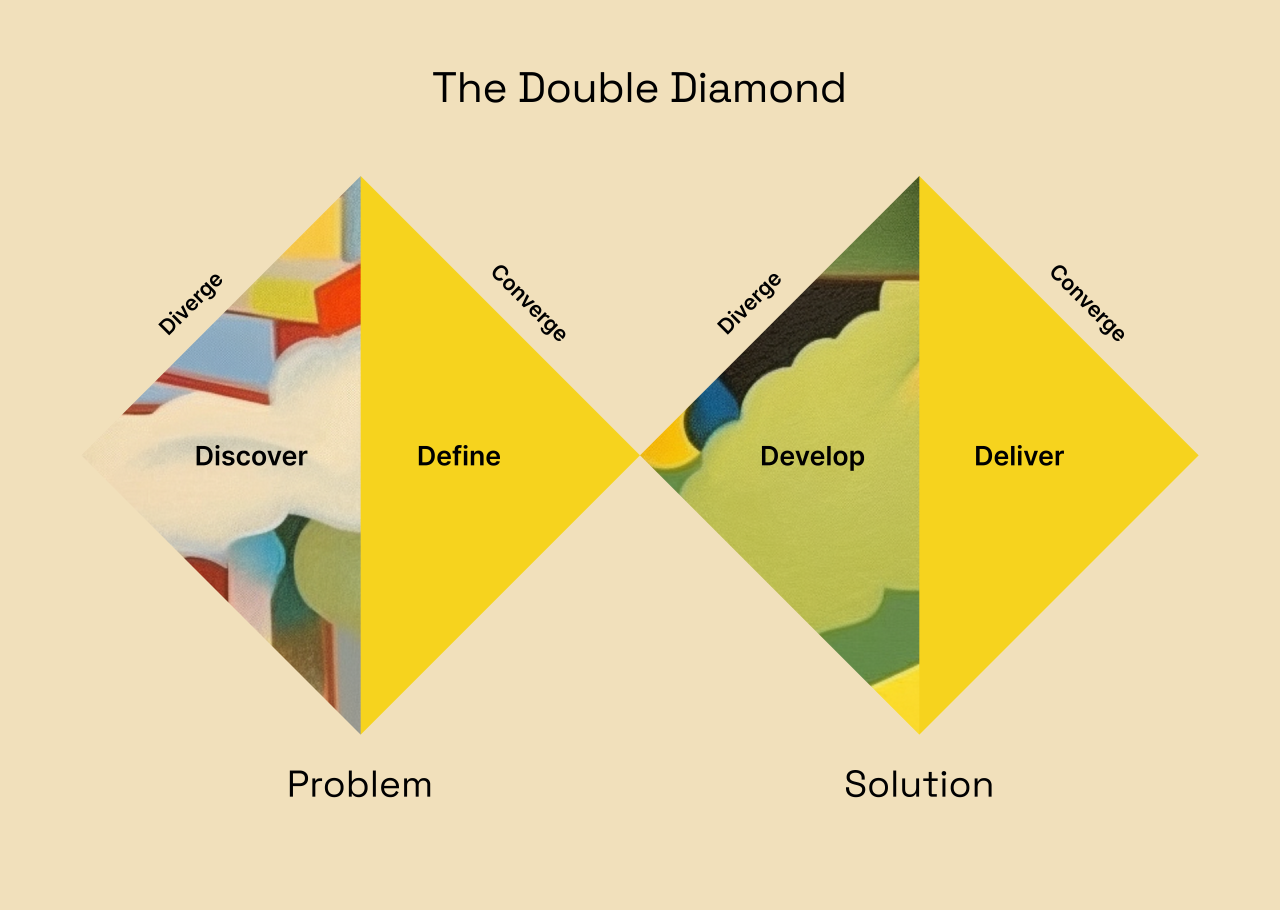

Brainstorming was the most famous creative thinking methodology to emerge from America in the 1950s. The label eventually faded, but the method did not. The foundations of Osborn’s process, which he originally concocted in a desperate attempt to save his failing ad agency, are also the foundations of design thinking, the double diamond, and other innovation methodologies we use today. Those methodologies are still often treated as panaceas, and are regularly dismissed as fundamentally unserious by people who work with things that can be measured: data, metrics, analytics.

There is, after all, no obvious connection between the generative, open-ended nature of creative thinking and the painstaking work of quantitative research.

Brainstorming and its methodological descendants, however, were born from quantitative research. More specifically, they were born from a method that was itself developed in response to misapplied quantitative research methods and the misuse of data.

Brainstorming as we think of it now, a group of people sitting in a room coming up with random ideas, is in fact only the first of three steps in the original brainstorming process. The second step is pausing to let the ideas settle (preferably overnight, according to Osborn), and the third is sorting and prioritizing the ideas with the goal of finding one or two that could solve the problem at hand.

Designers will recognize this process. After all, it mirrors the one we use all the time. The idea generation phase uses divergence, and the prioritization phase uses convergence, the same way the double diamond does. The two main activities of brainstorming are not just aligned to the double diamond process—they share the same root.

The design double diamond.

As adept as he was at showmanship, Osborn never claimed to have invented the labels “diverge” and “converge.” He attributed that insight to a different man: psychologist JP Guilford.

Guilford was a visionary. As early as 1950, he made this prediction:

We hear much these days about the remarkable new thinking machines…We are told that (they will lead to) an industrial revolution that will pale into insignificance the first industrial revolution. The first one made man’s muscles relatively useless; the second one is expected to make man’s brain also relatively useless.

…eventually about the only economic value of brains left would be in the creative thinking of which they are capable.

JP Guilford, Address to the American Psychological Association, 1950.

The promise of a technological revolution did not lead Guilford into creativity research. His original motive was much simpler: he wanted America to win the Second World War.

During the war, Guilford’s theory was that creative thinking was crucial to intelligence and leadership. His wartime role was developing creativity testing processes for the US Army Air Forces. The tests were a huge success, but Guilford had an advantage: he already knew what wouldn’t work.

That lesson came from an IQ test.

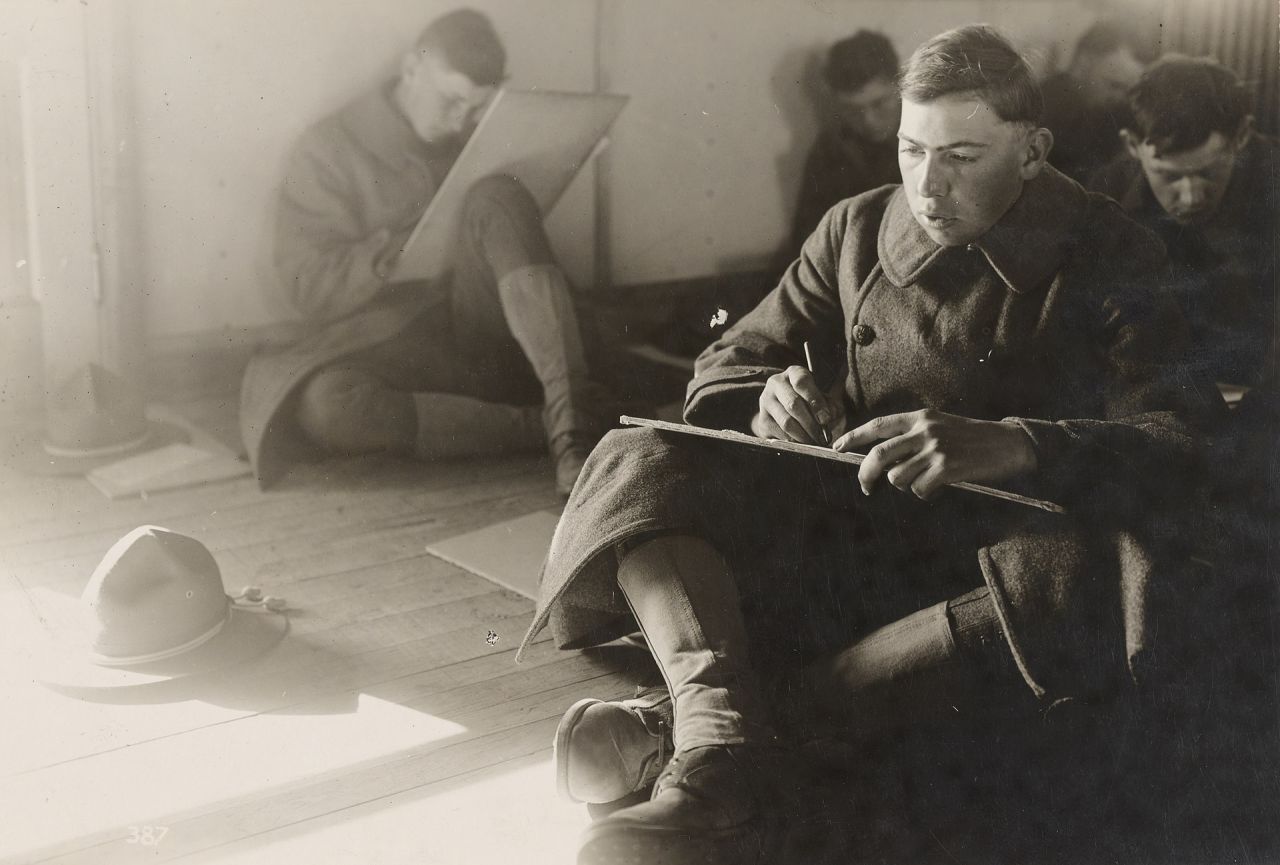

In the final years of the First World War, hundreds of thousands of new soldiers flooded into the US Army. The Army needed a way to classify these recruits quickly and at massive scale. To this end, 1.7 million American soldiers were subjected to the “Army Alpha” IQ test.

Army Alpha, and its alternative for illiterate soldiers (Army Beta), was a standardized test that assessed soldiers’ ability to do things like identify patterns, arrange shapes, decode scrambled sentences, and follow instructions. The goal was to evaluate both the soldiers’ intelligence and their leadership potential.

American soldiers taking the Army Alpha Test. Source: US National Archives.

Unsurprisingly, the original IQ tests were never intended to measure leadership potential. What may be surprising is that they were never intended to measure intelligence. The test’s inventor, Alfred Binet, did not even believe that intelligence was a fixed attribute:

“A few modern philosophers assert that an individual’s intelligence is a fixed quantity, a quantity which cannot be increased. We must protest and react against this brutal pessimism…”

Alfred Binet

Binet’s purpose in inventing the IQ test was to identify children at risk of falling behind at school. The test made no assumptions about why a child may need extra support—they may be recovering from illness, or grieving. The IQ test’s only job was to find them.

The Army Alpha test, it turned out, was good at finding people too. It excelled at identifying which soldiers were Black, which were recent immigrants who spoke little or no English, and very little else.

By the time Guilford was designing his tests for assessing creativity in pilots during the Second World War, the more immediate failures of the Army Alpha test were well established. To Guilford’s eyes, the fundamental problem with the test was simple: the questions had only one correct answer.

He named the ability to solve problems with only one correct answer “convergent thinking.”

Guilford was interested in more nuanced, less quantifiable problems—the kind that have many possible solutions. His goal was to assess and measure “the kind of intelligence that goes off in different directions.” He called this “divergent thinking.”

If the story of the Army Alpha test had ended there, the damage would at least have been contained. It didn’t. The dataset was too large and too tempting. Those 1.7 million test results, the ones that “proved” Black people and immigrants were inferior, became the foundation of “scientific eugenics” in the United States.

In 1923, a book based on the data from the Army Alpha test entered wide circulation. That book, liberally studded with graphs, charts, and authoritative-looking statistics, was called “A Study of American Intelligence.”

According to all evidence available, then, American intelligence is declining, and will proceed with an accelerating rate as the racial admixture becomes more and more extensive. The decline of American intelligence will be more rapid than the decline of the intelligence of European national groups, owing to the presence here of the negro. These are the plain, if somewhat ugly, facts that our study shows.

Carl Brigham, ‘A Study of American Intelligence’ (1923).

The conclusions of Brigham and his fellow eugenicists influenced American immigration policy for decades to come and American education permanently—he invented the SAT, which is based on the Army Alpha test and is still in use today. (The test’s other great legacy is global: it introduced the A, B, C, and D letter grading system.)

Eugenics’ other enduring legacy is eugenics itself. Eugenics was popular in mid-century America and other rich English-speaking nations among exactly the same people with whom it is increasingly popular today: well-educated, rich white people, especially those who work in, or aspire to work in, technology. Today’s eugenicist “pro-natalists,” whose ranks include Elon Musk, are mostly Silicon Valley types. They are as vulnerable to bad conclusions derived from bad data as any of their predecessors and may have even more potential to create mass harm.

Seven years after “A Study of American Intelligence” was released, Brigham admitted that his book ignored the role of English language fluency among recent immigrants and that his earlier conclusions were “without foundation,” but the damage was done. He never retracted his beliefs about the intrinsic inferiority of Black Americans.

The failures that Brigham confessed to were not his only ones. Two distinct beliefs had coagulated in his thinking. These beliefs were shared by the men who implemented and advocated for the Army Alpha test and, eventually, most educated white people across America.

The first, already rejected by Binet, Guilford, most researchers at the time, and all researchers today, was that intelligence is a fixed attribute.

The second, equally wrong but even more pervasive, was that intelligence is quantifiable.

Mere numbers cannot bring out…the intimate essence of the experiment. This conviction comes naturally when one watches a subject at work…What things can happen! What reflections, what remarks, what feelings, or, on the other hand, what blind automatism, what absence of ideas!…The experimenter judges what may be going on in [the subject’s] mind, and certainly feels difficulty in expressing all the oscillations of a thought in a simple, brutal number, which can have only a deceptive precision.

Alfred Binet

Advocates for the Army Alpha test were strongly motivated to believe that intelligence was fixed and quantifiable. If those two things were true, the test results were valid and valuable; if they were not, they weren’t. The researchers had every incentive to maintain both their belief in fixed intelligence and their statistical methods for analyzing IQ test data, despite both being wrong.

That’s not to say that quantitative research is inherently harmful. Guilford, for example, tried with some success to create quantitative measurements for divergent thinking. His most famous creativity test, the “paperclip” test, asked participants to come up with as many uses as possible for a common household item, then scored both the number and originality of the ideas.

The person who is capable of producing a large number of ideas per unit of time, other things being equal, has a greater chance of having significant ideas.

JP Guilford

It was divergent thinking that led a desperate Alex Osborn to invent brainstorming in 1938. It was teaching people how to think in a divergent way that made brainstorming such a success. And so, inadvertently, Osborn’s brainstorming perfectly demonstrated what Binet and Guilford knew and the “scientific eugenicists” were so desperate to deny—that a person’s capacity for creative thinking, and therefore their intelligence, can be improved.

In his brainstorming book, Osborn quotes Guilford on exactly this point:

“Like most behavior, creativity probably represents to some extent many learned skills. There may be limitations set on these skills by heredity, but I am convinced that, through learning, one can extend the skills within those limitations. The least we can do is remove those blocks that are in the way.”

JP Guilford, quoted in ‘Applied Imagination’ (1957 edition)

For both men, the path that led them to divergent thinking did not end there. How could it? They were divergent thinkers.

Guilford went on to develop a multifaceted model of intelligence called “The Structure of the Intellect,” and Osborn collaborated with academics to expand his method into a comprehensive approach to creative problem-solving. The last version of that process was called the Osborn Parnes Creative Problem Solving Method. It was a five-step diverge-converge model published in 1967, after Osborn’s death.

The diverge-converge model is, of course, the foundation of the double diamond. The five steps of the Osborn Parnes method have another legacy: they are the source of the five steps in “design thinking.”

The influence that Guilford, Osborn, and their fellow creativity practitioners have had on innovation methods today is obvious. Less obvious, but perhaps more important for those of us who work in technology, is the lesson of the Army Alpha test.

It shows us that there is no limit to the scale or scope of damage that bad data can do.

It reveals that a research methodology that is valid and useful in one context may be profoundly dangerous in another. The IQ test was well designed for its original purpose of identifying children who needed help at school. It was only when it was used to generate data about attributes that are not fixed and cannot be quantified that it led to catastrophe.

People who work in the ambiguous, creative, divergent-thinking side of innovation are sometimes accused of a lack of rigor, and often with good reason. Some of our processes are over-hyped, and we don’t always deliver good insights or solutions. Eighty years or more of methodological progress, from Guilford’s diverge/converge through Osborn’s brainstorming and eventually into the double diamond and design thinking, still don’t guarantee success.

But it’s also true that the mere fact of being quantifiable does not make research valid, and that being data-driven or even data-led can’t guarantee success either. If you use bad data as the foundation for problem-solving, you will always fail to solve the problem. But you might do something much worse, too.

Guilford’s observation that creative thinking is more crucial to leadership than “intelligence” remains true today, as does Osborn’s insight that generating ideas is best done separately from refining them.

Also true, and a source of great hope, is something the evidence leaves no ambiguity about: all of us can get better at creative problem solving—including the problems we ourselves had a hand in creating.

To live is to have problems and to solve problems is to grow intellectually.

JP Guilford

Subscribe to Outlier

Juicy, inspiring content for product-obsessed people. Brought to you by Dovetail.