Trust issues: the quest to design accountable AI

Designing an accountable AI by Tia Alisha

We need designers to open up AI black boxes and radically rethink the potential of this powerful technology.

Andrew Ng, co-founder of Google’s AI research lab, Google Brain, compared the transformative power of AI to that of electricity, and I think he’s right. I can’t imagine any industry that won’t continue to benefit from the tidal wave of innovation in AI. And—like electricity—AI is now part of the infrastructure of our lives.

As the co-founder of the Designing with AI Lab (DWAIL) at The University of Sydney, one of my missions is to equip designers with the knowledge they need to design complex AI-driven systems.

I believe this is important because when designers and engineers fail to estimate the potential of AI-driven systems, unintentional and counterintuitive outcomes can have huge impacts on people’s lives.

One example of AI-driven decision-making gone wrong comes from ProPublica’s exposé on the COMPAS Recidivism Algorithm. COMPAS (Correctional Offender Management Profiling for Alternative Sanctions) is a predictive tool used to assess how likely a criminal defendant is to commit another crime. ProPublica’s analysis found that COMPAS wrongly labeled Black defendants as future criminals at almost twice the rate of white defendants. This should never have happened, so why did it?

When AI-driven decision-making fails, it’s common to find two key drivers: a lack of accountability in the decision-making process and overconfidence in AI-made decisions. Together they create a heady mixture of opinionated, un-auditable, and seemingly trustworthy outcomes. Because of this, there has been a push for more “explainable AI,” championed by Big Tech and leading institutions like MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), which are calling for greater transparency and understanding of machine behaviors.

I believe if we equip designers and engineers with both more knowledge about the limitations of AI and better principles for designing systems that use AI-driven decision-making, we can avoid the pitfalls of unaccountability and overconfidence and, instead, build trustworthiness. To do this, I propose the following when designing AI-driven decision-making systems:

Trustworthiness = accountability + transparency

If AI systems are responsible for making predictive decisions about our lives, they must be trustworthy, and for that to occur, they must be both accountable and transparent. Accountable systems can be audited, and their decisions can be interrogated for consistency and bias. Transparent systems expose their decision-making processes and clearly communicate their limitations. These two principles are key to the trustworthiness of any decision made by an AI system.

For example, if an AI system cannot be made accountable by audit or interrogation, what assurances do we have that bias does not exist? If none are given, then why should the public trust the decisions made by AI systems? Or, if the system’s limitations are unclear or the process by which a decision is made is opaque, how can we be confident in the system’s conclusions?
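To make "accountable by audit" a little more concrete, here is a minimal sketch of one check an auditor might run: comparing false positive rates between groups, the kind of disparity described in the COMPAS example. This is not ProPublica's methodology. The function name, the record layout, and the toy records are all assumptions invented for illustration.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Return {group: false positive rate}.

    Each record is a (group, predicted_high_risk, reoffended) tuple.
    A false positive is someone flagged high risk who did not reoffend.
    """
    false_pos = defaultdict(int)  # flagged high risk, did not reoffend
    negatives = defaultdict(int)  # everyone who did not reoffend
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            negatives[group] += 1
            if predicted_high_risk:
                false_pos[group] += 1
    return {g: false_pos[g] / n for g, n in negatives.items() if n > 0}


# Hypothetical toy records, invented for illustration only.
records = [
    ("A", True, False), ("A", True, False), ("A", False, False),
    ("A", False, False), ("A", True, True),
    ("B", True, False), ("B", False, False), ("B", False, False),
    ("B", False, False), ("B", True, True),
]

rates = false_positive_rates(records)
disparity = rates["A"] / rates["B"]  # how many times higher group A's rate is
print(rates, disparity)
```

A check like this only works if the system's predictions and the real outcomes are available to whoever is auditing, which is exactly what an unaccountable system withholds.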

This is a new frontier for designers and engineers. It’s the perfect combination of socially significant and technologically complex. Unlocking the potential of AI decision-making rests on our ability to design trustworthiness into AI systems with accountability and transparency at their core.

Most designers are familiar with traditional models of trust that are often based on Maslow’s “hierarchy of needs,” where users move from “no trust” towards “high trust” based on the presence of key indicators such as design quality and interconnectedness. But when it comes to designing with AI-based systems, it can become more complex than this. For example, most of the systems you interact with that use AI-based algorithms are hidden from you. They’re black boxes. You put something in, and something comes out, but what happens in between is unknown.

One example is when you search on Spotify for a “Sunday morning vibe” (for which the answer is obviously James Blake–“Retrograde”), and you’re presented with recommendations from the machine-ether. To do this, Spotify has analyzed every song in its database for danceability, instrumentalness, energy, and countless more audio features to find you the closest match to your search terms. Yet this deeply rich dataset of millions of individually analyzed audio features is presented as a single text field to the user. In this example, Spotify relies on traditional indicators of trust such as consistency and social proofs to move a user towards a trustworthy experience. But users are missing out on a much richer experience, one that includes accountability and transparency of the algorithm itself as the drivers of trust.

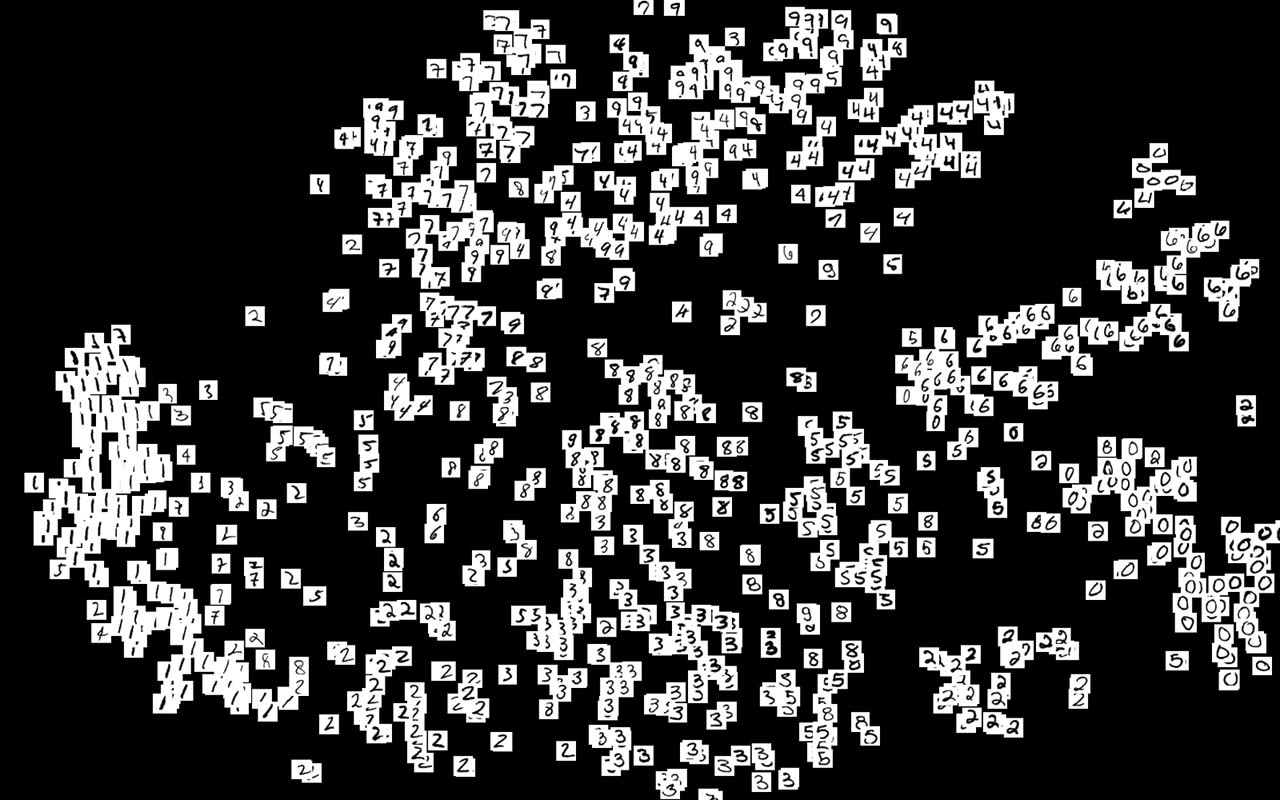

Let’s explore this idea using a core AI concept—latent spaces. A latent space is a compressed and sorted representation of all the data the system is trained on. You can visualize a latent space like this:

t-SNE projection of latent space representations from the validation set, courtesy of Hackernoon.

The system has sorted all the 1’s into a group on the left, all the 2’s towards the bottom, and all the 0’s towards the right. These groupings are one way that Spotify’s algorithm finds your recommendations. If a song is like another, it will be closer in the latent space. If it’s different, it will be far away. This gets interesting when you try to pick a point in the middle. For example, what if I want a song that’s halfway between James Blake’s “Retrograde” and Ed Sheeran’s latest ear worm?
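The "halfway between two songs" idea can be sketched in a few lines. This is a toy illustration, not how Spotify's system works: the two-dimensional coordinates, the track names, and the helper functions are all hypothetical, and real latent spaces have many more dimensions.

```python
import math

# Hypothetical 2-D latent coordinates, invented for illustration.
latent = {
    "track_a": (0.0, 0.0),
    "track_b": (4.0, 0.0),
    "track_c": (2.0, 0.5),
    "track_d": (0.5, 3.0),
}


def midpoint(p, q):
    """Point halfway between two latent positions."""
    return tuple((a + b) / 2 for a, b in zip(p, q))


def nearest(point, space, exclude=()):
    """Name of the item closest to `point` by Euclidean distance."""
    candidates = {k: v for k, v in space.items() if k not in exclude}
    return min(candidates, key=lambda k: math.dist(point, candidates[k]))


halfway = midpoint(latent["track_a"], latent["track_b"])
match = nearest(halfway, latent, exclude=("track_a", "track_b"))
print(halfway, match)
```

Here the midpoint is a position no track occupies, so the sketch returns the closest real track instead. Similar items sit close together, and "in between" becomes something you can ask for.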

Spotify has over the years supported a community of innovative product designers who are trying to take advantage of the capabilities of AI systems, like those that latent spaces allow for. From visualizing and comparing song structures to exploring the sonic relationships between artists, fostering experimentation with AI-driven systems sets the stage for making complex machine behaviors part of how a user can interact with Spotify.

These kinds of features have never made big waves in Spotify’s products, nor are they commonly found in other popular recommender-based systems. One of the reasons for this is that latent spaces are an example of an AI capability that designers struggle to have a clear vision for. But the key might be focusing on designing for trustworthiness. Let’s consider reframing Spotify’s search functionality. Instead of focusing on the correctness or quality of the system’s recommendations as the key driver for trust, what if we instead focused on exploration as the driving principle?

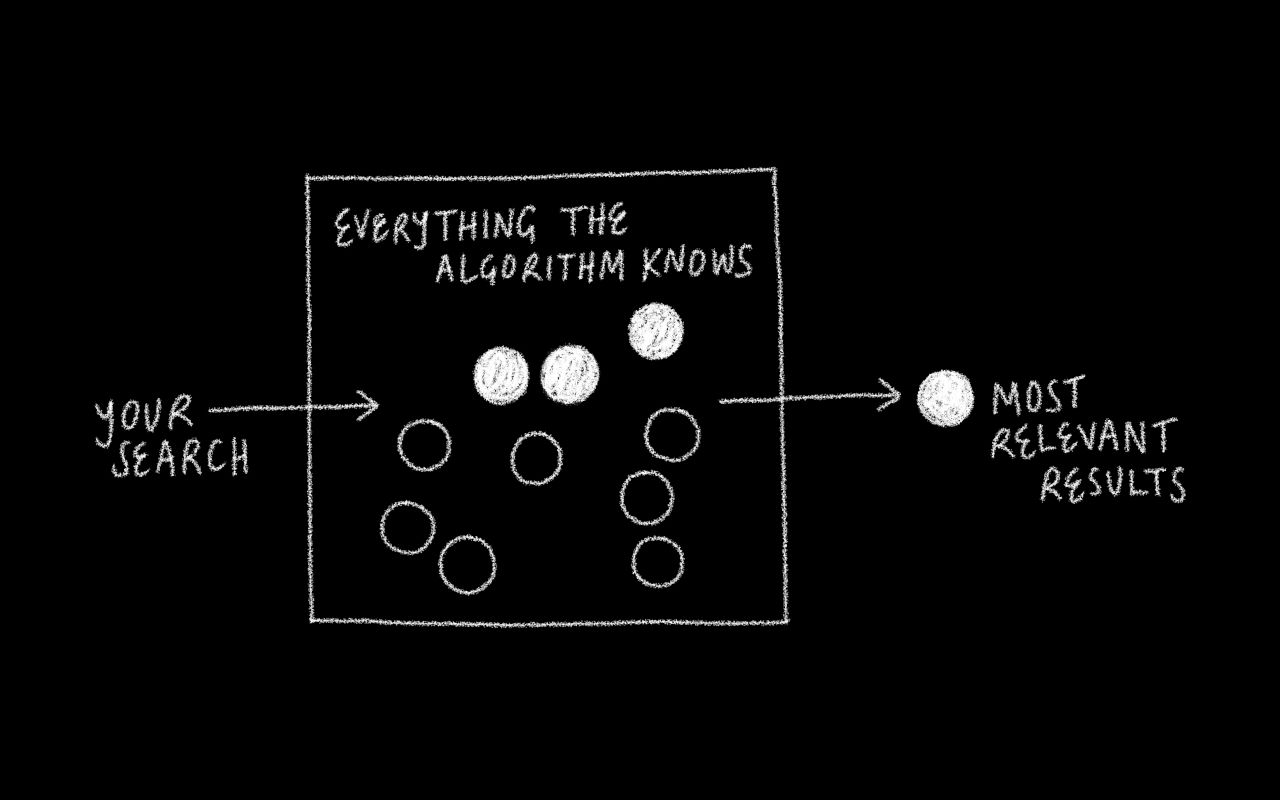

With a focus on correctness, a recommender system like Spotify’s will take your input and return the most correct results to you, perhaps filtered by another measure of relevance based on your profile. It might look something like this:

An example of closed “black box” recommendation system.

Everything the algorithm knows about the information you’re searching for is “black boxed,” filtered down into the most likely and relevant results, and returned to the user. This is an excellent model when correctness generates trustworthiness with the system—the fewer opportunities for the algorithm to be incorrect, the better. But we know from the example of COMPAS this comes with a trade-off. Black-boxed systems are inherently unaccountable and keep users from understanding the reasoning behind a decision.

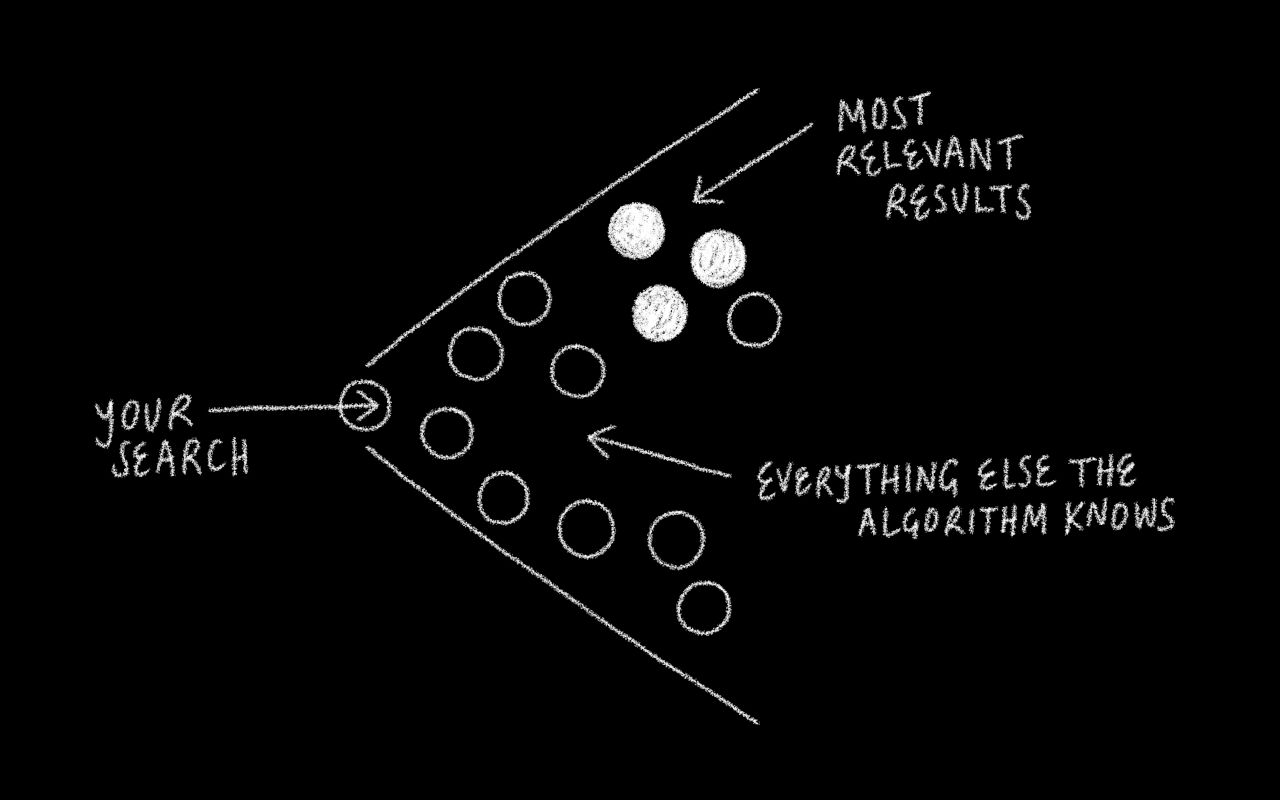

But what does an interaction with an AI-driven tool look like if we instead focus on explorability? You can imagine an interaction that looks more like this:

What an open AI system with transparency and explorability at its core.

This kind of interaction model is open to including users as active participants in deciphering the outcomes of a search. It presents the results of a query contextualized in their latent space alongside less relevant information. Doing so increases the system’s transparency while also offering an opportunity for AI-driven decisions to be interrogated.
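The difference between the two interaction models can be sketched as two functions over the same data. This is an assumption-heavy toy, not any real recommender's API: the catalog, its coordinates, and both function names are hypothetical. The closed version returns only the top matches, while the open version returns every item with its latent position, its distance from the query, and whether it made the cut.

```python
import math

# Hypothetical catalog of items with 2-D latent positions.
CATALOG = {
    "track_a": (0.0, 0.0),
    "track_b": (1.0, 0.0),
    "track_c": (3.0, 4.0),
    "track_d": (0.0, 2.0),
}


def closed_search(query_point, catalog, k=2):
    """Black-box style: return only the names of the k closest items."""
    ranked = sorted(catalog, key=lambda n: math.dist(query_point, catalog[n]))
    return ranked[:k]


def open_search(query_point, catalog, k=2):
    """Explorable style: return all items, closest first, with the
    context a user would need to question the ranking."""
    ranked = sorted(catalog, key=lambda n: math.dist(query_point, catalog[n]))
    return [
        {
            "name": name,
            "position": catalog[name],
            "distance": math.dist(query_point, catalog[name]),
            "recommended": rank < k,  # True for the items a closed system would show
        }
        for rank, name in enumerate(ranked)
    ]


query = (0.0, 0.0)
top = closed_search(query, CATALOG)
explorable = open_search(query, CATALOG)
print(top)
print(explorable)
```

Both functions rank items the same way. The open version simply declines to throw away the less relevant results and the distances behind the ranking, which is the raw material an interface would need to let users interrogate a recommendation.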

As with black-boxed systems, there is a trade-off. Systems like this are inherently more complex to interact with, and representing a latent space is not always straightforward. Despite this, the model opens up exciting possibilities for recentering the design of AI systems around human-centered goals such as searching and inspiration. It asks designers to consider: What if, instead of automating away decisions from ourselves, we focused on augmenting and extending decision-making by allowing AI systems to become collaborators in our thinking?

I spoke about this idea with Associate Professor Oliver Brown. He is both an electronic musician and founder of the Interactive Media Lab at the University of New South Wales. In his recent book Beyond the Creative Species, Prof. Brown explores the role computers play in creating music, art, and other cultural artifacts.

“If the machine can simulate neural processes, it can perform creative search and discover new and valuable things, and therefore, it can entirely be creative. There’s no mystery about that at all,” said Brown on the subject of whether AI systems can be creative. But he sees a limitation. “We’ve yet to build machines that are very successfully culturally embedded, and one of the most important things is that artistic practices, right down to their motivations, are culturally grounded.”

A key distinction is made here: systems like Google’s Magenta can generate musical rhythm and melody endlessly, but the system itself has no understanding of motivation or cultural context. It has no idea if the beat it’s made is, in fact, sick. “If you just program a machine to generate patterns, it doesn’t have any strategies and goals and objectives. It’s therefore lacking in a really obvious way,” said Brown.

Culturally grounding AI systems means working out how to embed human-centered goals and objectives in AI-driven decision-making. For example, Magenta cannot evaluate how valuable its output is. To do that, we need to build machines that understand cultural value as we do. Without this, AI systems will remain isolated probabilistic number generators, prone to bias and misunderstanding.

In a recent study conducted at DWAIL, we looked closely at music producers working with Magenta. The study’s goal was to understand how Magenta contributes to creative decision-making. Participants were asked to compose music with Magenta and estimate how close they were to completing their creative task. While they did this, we monitored their interactions with the system, focusing on when they used the system to generate new musical output. We found that Magenta can dramatically change the course of a creative task, provoking a composer to change their approach and explore new ideas. Still, it wasn’t always a positive experience for users.

We observed that users can be easily discouraged if Magenta can’t produce valuable musical output. For example, the user inputs a musical sequence, and Magenta generates musical output. But when the user listens to this output, they estimate that they’re actually further from their goal than before.

In follow-up interviews, users were asked to review footage of themselves composing with Magenta. When asked about moments when they estimated they were further from their goal after listening to Magenta’s output, it became clear that not all generated material was of equal value. Magenta could not understand their creative goals and could not communicate effectively with the user about the desirable qualities of generated musical sequences.

Communication of human-centered goals with AI-driven tools is the new frontier for designers and developers alike. Engaging our intelligent tools as collaborators by embedding cultural awareness is the first step in building more accountability, trustworthiness, and transparency into our everyday experiences with AI.

My boring dystopia is one in which we fail to pursue an alternative vision of AI-driven decision-making, one where unaccountable, black-boxed algorithms decide insurance claims, determine who gets hired, and reinforce gender inequality. If we continue to allow the proliferation of AI-driven automated decision-making uncritically, we will continue to see instances of AI gone wrong.

I hope we choose the alternative. Let’s reframe our relationship with AI-driven tools and focus on how they can extend, enhance, and augment decision-making processes. Let’s design new tools that support human-led, collaborative, and culturally grounded partnerships with our intelligent tools, drawing on the principles of accountability, trustworthiness, and transparency.

Two design resources for thinking through this problem are Google’s People + AI Research (PAIR) group guidebook and IBM Research’s Trusted AI tools. Both are practical starting points for designers and developers making this critical shift in thinking.
